Yet another post on ML 😛

Recently, I was working on an assignment with my group, about the needs and uses of specialized hardware for ML. I browsed through a few articles on the internet about this and decided to compile them here.

Why specialized hardware is important for Machine Learning?

One may ask, why a GPU is needed?

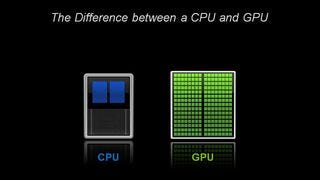

There are broadly 2 stages to ML. The first stage is Training and the second stage is Inference. Training a ML model requires more computational power and resource. Especially when working with Neural Networks, it is essential to process huge amounts of data to train the model. This process usually involves some heavy matrix calculations. For instance, visual data are stored in the form of matrix and hence to process them, the processor must have good matrix computational capacity which translates to having more number of small and simple cores. A CPU generally has less no. of cores viz. 4 cores or 8. But a GPU has far more no. of cores in them viz. 400 cores. But core of CPU is much more versatile than a GPU core. Yeah. That’s right. And that is exactly what makes a GPU core more suitable for matrix computation. Because it doesn’t require much logical processing. Just a huge no. of partly independent arithmetic operation. So, the more no. of cores, the more amount of parallelism hence much faster matrix computation. This is why GPU is used to handle the training part. So, GPU is a specialized hardware used for ML. The inference part, however, is not as resource intensive as the training part for obvious reason. Hence, the inference can be done in a less powerful system. After the training is done on the GPU, the weights and biases are saved and then using those the inference is done is on a less powerful system. GPU is not the only hardware can be specialized for ML. Recent innovations on FPGAs (Field Programmable Gate Arrays) and ASICs (Application Specific Integrated Circuits) have made them viable for use in ML. So above all, the motive of specialized hardware is to optimize the utilization of the available resources and ensure that no resource is under-utilized.

What are different ways of implementing ML algorithms in specialized hardware?

As mentioned above, GPU is not the only specialized hardware that is used for ML. The ML algorithms can be programmed on the hardware on a application level or on a hardware level. A HDL (Hardware Description Language) like Verilog or VHDL is used to program them on the hardware.

The use of FPGAs (Field-Programmable Gate Arrays) and ASIC (Application Specific Integrated Circuits) is also gaining traction in the field of ML. FPGA’s main advantage is it’s re-programmability of the logic path and low latency and the high speed owing to its extremely wide internal memory bandwidth.

ASIC isn’t customizable as an FPGA is. But once, the logic path is designed, it runs very fast. In an FPGA, the logic path is set on an application level. Whereas, on the ASIC, it is done on a hardware level. Hence the speed up. Designing an ASIC is a very skilled work and requires lot of money and resources. And, once the logic path is set, it cannot be changed. But the ML algorithms, evolve and better over time and cannot be updated on an ASIC. Google Cloud TPU (Tensor Processing Unit) is based on the ASIC technology. The major innovator in field of FPGA is Xilinx.

As for the GPU, Nvidia leads the innovation. Nvidia has released CUDA which is a parallel computing platform for their GPUs and the cuDNN library which contains optimized and highly tuned implementations of some frequently used ML algorithms like pooling, forward and back propagation. So hardwares are sometimes assisted by some specialized libraries to help implement the ML algorithms in them.

Advantages of FPGA implementation?

FPGA have some fundamental advantages compared to other such hardware.

- Compared to an ASIC, a FPGA is re-programmable whereas the ASIC is not. The logic path is on a FPGA is written using a hardware description language (HDL). Hence, a FPGA’s logic path is easily re-programmed by erasing the previously written logic. In an ASIC, however, the logic is implemented in the hardware, i.e, the ASIC is assembled in such a way that it is ‘application specific’. The way the components are assembled on that is specific for each use.

- FPGA has extremely low latency. This is very useful in cases where even a 1ms delay is not desirable.

- The modern FPGAs , innovated by Xilinx, are very fast. They also have a superior energy efficiency (performance/watt) compared to GPUs. Although, the peak performance isn’t as good, in cases where the power resource is important, FPGAs can be used.

- Input/Output (I/O) in an FPGA is much faster because it has transceivers which have direct interface for data sources. The data can be made to go directly to the FPGA rather than the host processor. Hence, there is also a deployment flexibility.

I hope this write up can help someone understand the basics of the topic. :)

Machine Learning, Its Implementation in Specialized hardware was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.